How Generative AI Has Changed the Data Analytics Landscape and How It Hasn't Yet

AI has gotten amazing at answering our nuanced questions, but what about the "simple" questions we're still terrible at answering for ourselves?

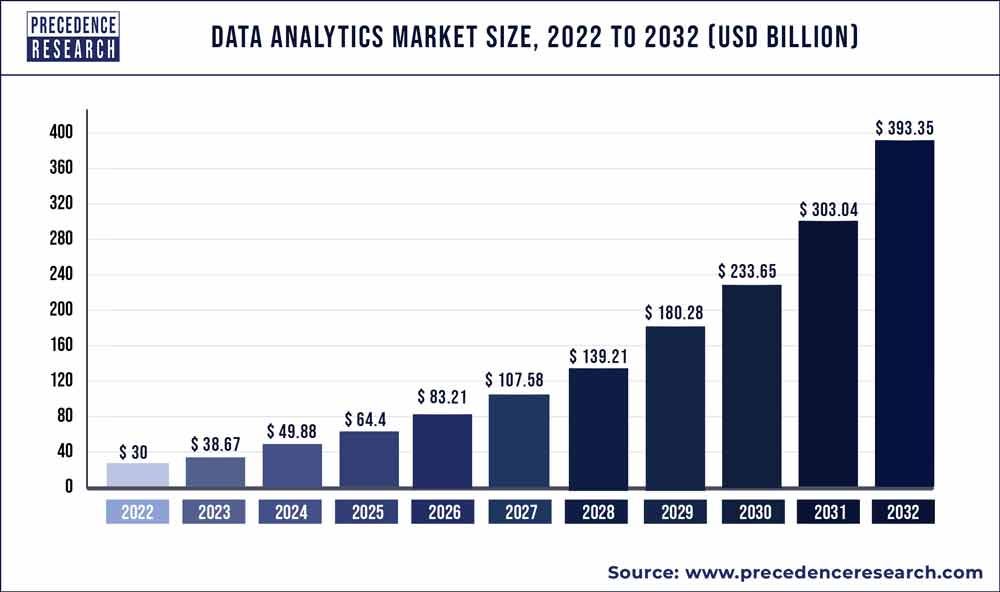

The data analytics software market is big and growing fast. By some forecasts, it’s on pace to grow 1,000% over the next decade, to roughly $400B per year.

I cut my teeth as a product manager in predictive analytics, working in the space for almost a decade. It’s safe to say that a good chunk of that forecasted growth will stem from AI-related technologies, though you don’t need any industry expertise to divine that nugget of insight.

Until recently, I believed my most significant insight about predictive analytics products to be this:

Businesses pay people for advice and software for answers.

The more you try to provide advice through analytics tools, the harder success becomes.

I’ll explain my reasoning as succinctly as I can, with the caveat that the times, they are a changin'.

The ROI Problem for Analytics

The path to success gets narrower for products as they venture further into advice territory because of the ROI question in software. But let’s start with what makes the advice business different from the software business.

When businesspeople face important questions with no objectively correct answer, they want advice. They turn to people they trust and respect for it. When the needed expertise isn’t in the building, companies hire consultants and advisors to help them navigate the tough issues.

No matter how much money a company spends on advisory services, they almost never think about the value they get back in ROI terms. This is key. Rather, they ask questions like:

Did they deliver what they promised?

Did the deliverables meet our expectations?

Were they easy to work with?

Would we hire them again?

They ask qualitative questions because there’s an implicit understanding shared by all that advice defies conventional value measures like ROI. Serious advice on complex matters involves nuance, as does how the company chooses to act on it. Hence, the folks signing the checks dispense with ROI for evaluating professional services.

Software, on the other hand, gets absolutely no such benefit of the doubt.

Business buyers expect demonstrable ROI from software - analytics tools included. Anything more sophisticated than a spreadsheet must show tangible ROI to customers or risk churning.

Pure play analysis and dashboard tools usually try to demonstrate ROI in terms of productivity. Business users constantly need information to make decisions and get work done — how many units shipped, how many users converted, what’s our pipeline coverage, etc. If people can get those answers for themselves, everyone’s making good decisions more quickly.

Predictive analytics tools also go down the productivity and efficiency route to prove ROI, by automating or optimizing processes for instance. What distinguishes predictive analytics tools is their ability to generate ROI by directly impacting customer outcomes in revenue and cost terms. Whether maximizing clicks on digital ads, recommending upsell products, or any of countless other examples, these products have a stronger ROI case all else equal.

A key thing to understand about those predictive models is that they’re most successful when operating in narrow contexts. They impact specific business outcomes in specific cases and do it extremely well.

Where predictive analytics products often run into trouble, as I learned, is when they try to branch out to use cases for which they’re not well suited and for which proving ROI is murky.

There are already plenty of basic analytics tools and no small number of predictive analytics tools for well-established use-cases, so predictive analytics companies hunt for the next frontier. They build fancier ($$$) models and data products aimed at users who deal with much more nuanced, complicated business questions than recommending upsell products before the checkout page.

Even if the new tool is compelling, the problem is that its target users are more inclined to spend budget on advisors or new hires to solve these problems than on an expensive, novel, untested software product.

I’d felt good about that little thesis for years — right up until ChatGPT came along, kicked in the door, and turned everything I thought I knew on its head.

Almost.

Generative AI Instantly Changed What We Think Software Can Do Well

Let’s start with what obviously has changed dramatically - AI’s ability to answer abstract questions with a level of humanoid clarity that had been science fiction prior to ChatGPT. It’s an epochal achievement in the history of human technology.

Within a month of its launch, practically every software company in every vertical was scrambling to figure out its “AI strategy” at the same time. The closest comparison from my own career is the launch of the first iPhone, when we all suddenly needed to figure out our “app strategies.”

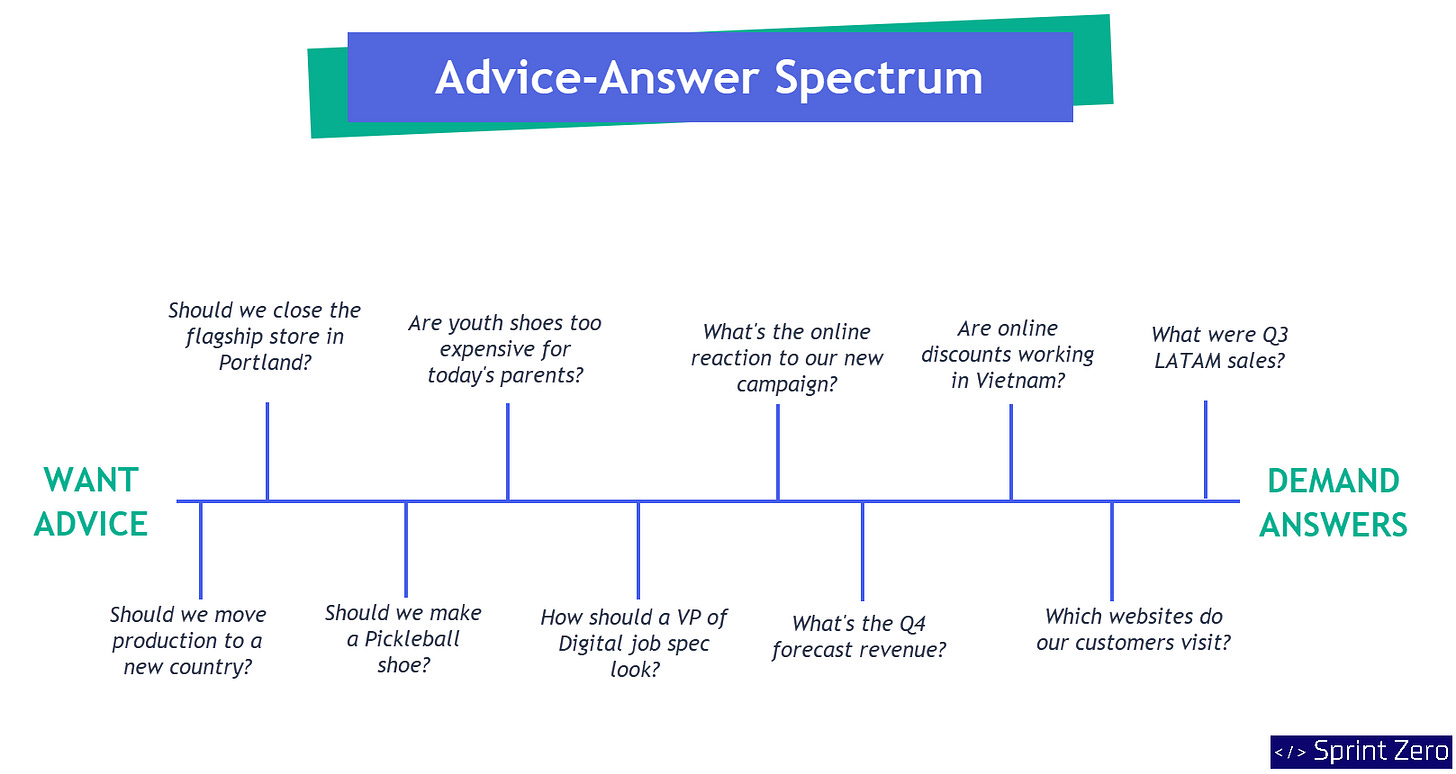

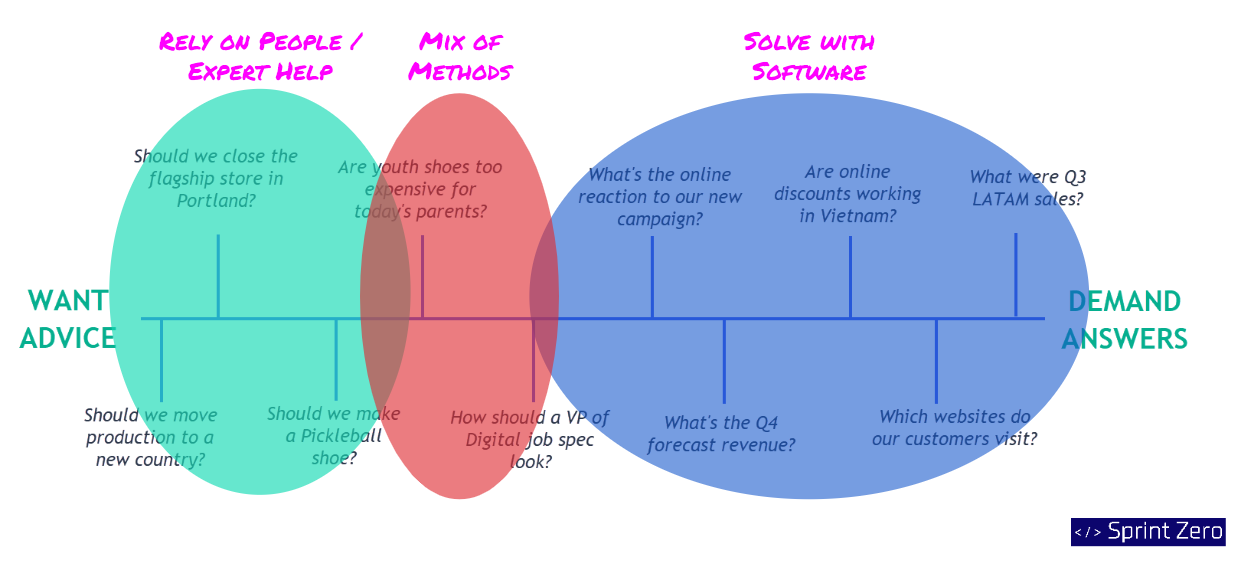

I picture all business questions sitting somewhere along what I call the Advice-Answer Spectrum. The abstract questions with multiple angles and no objectively right answer lie near the Advice end. At the other end are questions with binary or factual answers that can be calculated deterministically.

I’ve plotted a few example questions along the spectrum that an executive at Nike might face. I’m using Nike for the sole reason (no pun intended) that I’m wearing their shoes as I write this:

Everything around the middle of the spectrum can go either way depending on individual preferences and circumstances.

Questions and problems near the middle are the ones for which people are more open to experimenting with new approaches. I might delegate one of these to a junior team member to see how they respond, or I might use one as an opportunity to test a new product.

From a user psychology standpoint, there are two things about ChatGPT that really stand out to me:

1. ChatGPT was such a ridiculously huge improvement over earlier chatbots that it skipped the early adopter phase. Despite requiring users to adjust how they do something familiar (looking things up on the internet), it achieved immediate widespread adoption.

2. Due to #1, business users started applying AI to questions and use-cases that they’d never imagined could be addressed with software.

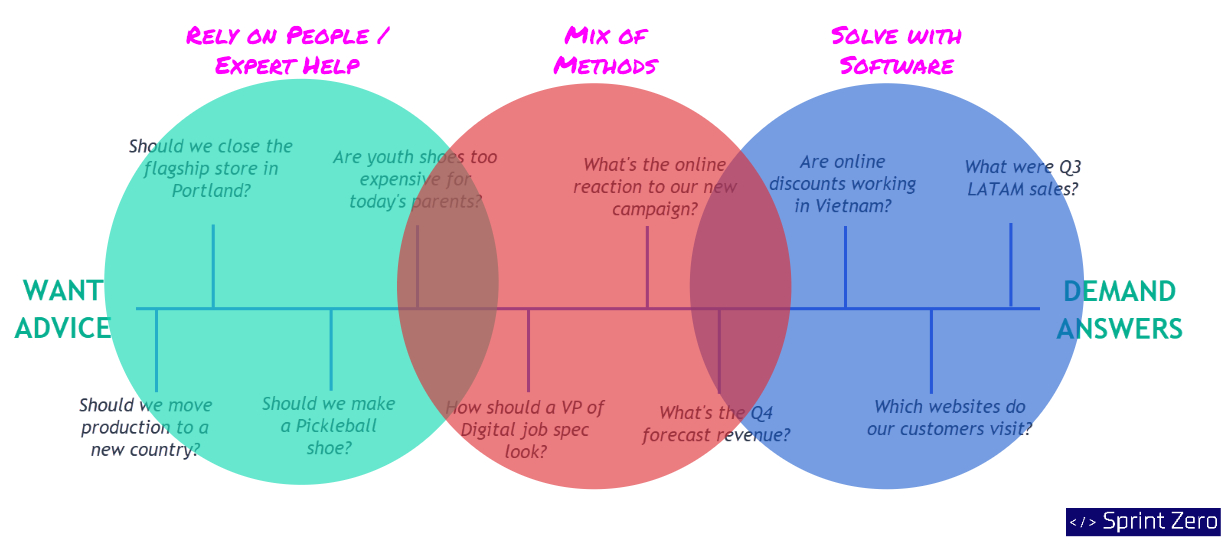

Generative AI apps are now eating the middle of the Advice-Answer Spectrum. They’re compressing the expert advice region too, because a less experienced employee armed with AI is becoming a reasonable option for more use-cases.

If the distribution of how businesses solve problems used to look like this,

it’s fast evolving into this.

There’s nothing new about technology replacing humans for some tasks and making them more productive at others. But generative AI has effected a sea change virtually overnight with regard to our beliefs about the degree of task complexity that software can handle well.

Also, every analytics software vendor today still talks about how they shorten the time from data to insight to action. But generative AI tools take this to previously unimagined levels.

Answering big important questions has always been an iterative, multi-step process: we locate the data we need, run the analysis, interpret the results, synthesize the findings into documentation, and finally make a decision. But generative AI does most of that in a single step that takes just a few seconds. The lines between these discrete steps and the dedicated tools we use to do them suddenly seem fuzzy because of how AI solves these problems.

Personally, I don’t think the category distinctions that we’re used to - analytics tools, documentation tools, presentation tools, etc. - will still be relevant a few years from now.

So much has changed and will continue to change in the months and years ahead. But there’s at least one aspect of data analytics that generative AI hasn’t affected much yet as far as I can tell.

What Generative AI Hasn’t Changed Yet for Analytics

For all the ways that generative AI has expanded the software frontier to new problems, I don’t think it’s transformed how we answer the supposedly simpler business questions at the ‘Answer’ end of the spectrum to anything like the same degree.

I could be wrong, but I haven’t seen much impact at all in this area, and at first glance that’s counterintuitive. Generative AI is so powerful, after all. Shouldn’t it have transformed how we do plain old data analysis too?

Yes and no.

While I’m not saying AI can’t do for basic analysis what it’s done for other use-cases, there are some good reasons why it hasn’t yet.

The Simplest Questions Can Be the Hardest to Answer

The other day, a friend of mine, an executive at a geospatial analytics startup, had to create a job description for a role she was unfamiliar with and had never hired for in her career. So she turned to ChatGPT. In less than an hour, she went from having no idea what to include to sending a finished product to her CEO.

AI for the win, for sure…but consider how strange my friend’s situation is for a moment:

She used an AI tool to create a competent deliverable on an unfamiliar subject in a matter of minutes. Yet despite being deeply knowledgeable about her company’s business model, past performance, and everything else, she needs significant help to extract even basic information from her company’s raw data.

Unless someone else has already created the exact view of the data she needs, she’s SOL.

That juxtaposition is bonkers to me, and yet it also makes sense:

My friend doesn’t know SQL, not that it would help much if she did. Her company is typical in that all of the business data lives in an idiosyncratically organized data warehouse with thousands of tables, cryptic field labels, incomplete information, inconsistent metric definitions over time — all the same data maladies that your company has too.

The unique craziness of every company’s data so far seems to have kept generative AI from making what should be simple actually simple.

Will it help us solve this problem someday? I think so and have thought about what that could look like, but it’s time to hit publish.

Til next time

If you’ve seen tools that address this or you’re working on one, let’s talk, please: christian.m.bonilla@gmail.com

Great post, Christian. It seems like for some orgs (like my own), as soon as there's a comfort level in being able to use the prompt-friendly tech on our own data, we could see a massive jump for our internal BI/analytics departments. I think it's one part not wanting to bulk up staffing on the roles that can do it with more complex tech and a second part not feeling comfortable just dropping years of business data into models where we have no idea who's using them and for what.